PyMuPDF-Layout: 10× Faster PDF Parsing Without GPUs

November 6, 2025

The Origin

Last September, our colleagues from Europe and Asia (you'll find Artifex team members working in almost every time zone) gathered in a small coastal town 70 miles north of our San Francisco HQ. As the fog rolled in, we spent an entire week on a single question: how can we combine our 35 years of experience in document processing, especially with unstructured formats like PDF, with cutting-edge AI to give our users the best possible data parsing solution?

Artifex already provides a popular parsing solution in the PyMuPDF family (including PyMuPDF4LLM, which specializes in Markdown output). Notable customers such as Mistral AI, Harvey AI, DocuSign, and Oracle use our solutions, but we've noticed that the space is evolving rapidly with the AI revolution. For parsing, the most interesting new approach uses VLMs (Vision Language Models). In simple terms, a VLM pipeline ignores the PDF's internal structure, renders each page to an image, and extracts data from that image with a Vision Transformer architecture.

VLMs are certainly an innovative approach, but they have one problem: high computational cost. They consume significant GPU resources, which makes processing slow and expensive. A startup with tens of millions in venture funding might absorb that cost while its valuation climbs without much profit. But here's what most teams miss: strong ML capabilities aren't enough without deep expertise in documents themselves. They're solving a document problem by throwing away the document's structure and treating PDFs as mere bitmaps. We thought we could approach it differently by leveraging what we actually know: 35 years of PDF expertise, combined with modern ML. We believe you need both capabilities to truly solve this problem.

What if our native extraction solution, built on heuristic algorithms, could help us train our own ML model without relying on GPUs? Wouldn't this truly hybrid approach deliver both speed and accuracy?

What excited us most was realizing that this is a question only Artifex can ask: no other company in the world has both extensive PDF knowledge and AI researchers and engineers working together.

Introducing PyMuPDF-Layout

Fast-forward through a year of development: we've finally reached the stage where we can introduce it to beta testers. We call this hybrid approach PyMuPDF-Layout.

Here's what makes PyMuPDF-Layout different: instead of treating documents as images and letting a massive (and possibly remote/offsite) neural network figure everything out, we start with what we already know about PDFs.

First, we use MuPDF's native PDF parsing to extract structured information: font statistics, line spacing patterns, character positions, text indentation, margins. These aren't guesses. These are precise measurements pulled directly from the PDF structure. Think of it as reading the document's DNA rather than just looking at its picture.
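To make the idea of "precise measurements" concrete, here is a minimal sketch of turning text-span data into per-line layout features. It assumes input shaped like the dictionary PyMuPDF returns from `page.get_text("dict")` (blocks containing lines containing spans, with `size`, `flags`, and `bbox` keys, where flag bit 16 marks bold text). The sample page is hand-written, and the function name `line_features` and the particular features are hypothetical illustrations, not PyMuPDF-Layout's actual feature set.

```python
from collections import Counter

BOLD_FLAG = 16  # PyMuPDF span flag bit for bold text


def line_features(page_dict):
    """Derive simple per-line layout features from a PyMuPDF-style text dict.

    Hypothetical feature set for illustration only.
    """
    # Text blocks have type 0; image blocks (type 1) carry no lines.
    lines = [line
             for block in page_dict["blocks"] if block.get("type") == 0
             for line in block["lines"]]

    # The most common font size on the page is treated as the body size.
    sizes = [span["size"] for line in lines for span in line["spans"]]
    body_size = Counter(round(s, 1) for s in sizes).most_common(1)[0][0]

    # Leftmost text edge on the page acts as the left margin.
    left_margin = min(line["bbox"][0] for line in lines)

    features = []
    prev_bottom = None
    for line in lines:  # lines are visited in the order they appear in the dict
        x0, y0, x1, y1 = line["bbox"]
        spans = line["spans"]
        features.append({
            "text": "".join(s["text"] for s in spans),
            "size_ratio": max(s["size"] for s in spans) / body_size,  # >1 hints at a heading
            "bold": all(s["flags"] & BOLD_FLAG for s in spans),
            "indent": x0 - left_margin,  # offset from the page's left text margin
            "gap_above": None if prev_bottom is None else y0 - prev_bottom,
        })
        prev_bottom = y1
    return features


# Hand-written sample shaped like page.get_text("dict") output.
sample = {"blocks": [
    {"type": 0, "lines": [
        {"bbox": (72, 72, 300, 90),
         "spans": [{"text": "Introduction", "size": 14.0, "flags": 16}]},
    ]},
    {"type": 0, "lines": [
        {"bbox": (72, 102, 540, 114),
         "spans": [{"text": "PDF files store text as positioned glyphs.", "size": 10.0, "flags": 0}]},
        {"bbox": (90, 116, 540, 128),
         "spans": [{"text": "Indented continuation line.", "size": 10.0, "flags": 0}]},
    ]},
]}

for f in line_features(sample):
    print(f)
```

With PyMuPDF installed, the same function could be given `page.get_text("dict")` for a real page instead of the hand-written sample.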

Then we feed this structured data into a Graph Neural Network. The GNN treats text boxes as nodes and their spatial relationships as edges. It takes our heuristic features (things like "this text is 14pt bold" or "these boxes are vertically aligned with 12pt spacing") and learns which patterns indicate titles, paragraphs, tables, or figures.
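The graph idea can be sketched in plain Python. This is a hypothetical illustration, not PyMuPDF-Layout's architecture: it assumes a k-nearest-neighbour rule for choosing edges (k=2 here), a few simple geometric edge features, and a single round of unweighted mean aggregation. A trained GNN would instead learn how to weight and transform these messages, typically conditioning on the edge features, before a final layer classifies each node as a title, paragraph, table, or figure. The function names `build_layout_graph` and `message_pass` are made up for this example.

```python
import math


def build_layout_graph(boxes, k=2):
    """Connect each text box to its k nearest neighbours by centre distance.

    boxes: list of (x0, y0, x1, y1) tuples.
    Returns directed edges as (i, j, edge_features) tuples.
    """
    centres = [((x0 + x1) / 2, (y0 + y1) / 2) for x0, y0, x1, y1 in boxes]
    edges = []
    for i, (cx, cy) in enumerate(centres):
        # Naive O(n^2) neighbour search; fine for a sketch.
        dists = sorted(
            (math.dist((cx, cy), centres[j]), j) for j in range(len(boxes)) if j != i
        )
        for _, j in dists[:k]:
            edges.append((i, j, {
                "dx": centres[j][0] - cx,
                "dy": centres[j][1] - cy,
                "left_aligned": abs(boxes[j][0] - boxes[i][0]) < 1.0,  # same left edge
            }))
    return edges


def message_pass(node_feats, edges):
    """One round of mean aggregation.

    Each node averages its neighbours' feature vectors and concatenates the
    result with its own features. Edge features are ignored in this sketch;
    a learned GNN layer would use them to weight the messages.
    """
    n, dim = len(node_feats), len(node_feats[0])
    sums = [[0.0] * dim for _ in range(n)]
    counts = [0] * n
    for i, j, _ in edges:  # message flows from neighbour j into node i
        for d in range(dim):
            sums[i][d] += node_feats[j][d]
        counts[i] += 1
    return [
        node_feats[i] + [s / counts[i] if counts[i] else 0.0 for s in sums[i]]
        for i in range(n)
    ]


# Four boxes: a heading, two body lines, and a distant caption-like box.
boxes = [(72, 72, 300, 90), (72, 102, 540, 114), (72, 116, 540, 128), (300, 400, 500, 420)]
# Per-node features: [size_ratio, bold]
node_feats = [[1.4, 1.0], [1.0, 0.0], [1.0, 0.0], [0.8, 0.0]]

edges = build_layout_graph(boxes, k=2)
for row in message_pass(node_feats, edges):
    print(row)
```

Limiting each node to a few nearby neighbours keeps the number of edges linear in the number of boxes, which is one reason a model of this shape can stay small enough to run comfortably on a CPU.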

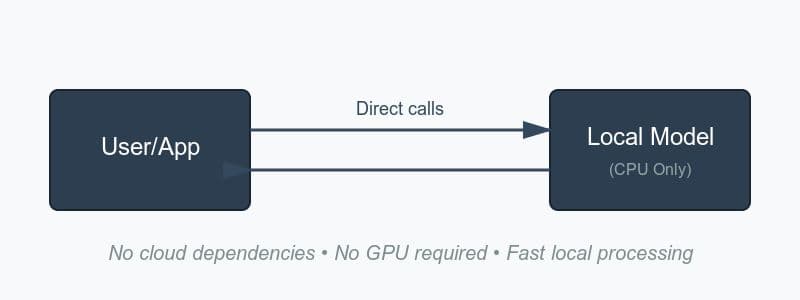

The key difference: VLM approaches process full-resolution page images through massive Vision Transformers that need GPU acceleration. PyMuPDF-Layout extracts features first (CPU-cheap), then runs a small GNN (also CPU-cheap). The heuristics do the heavy lifting upfront, so the model doesn't have to.
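A rough back-of-envelope comparison shows why extracting features first shrinks the model's job. The numbers below are assumptions chosen purely for illustration (a 1024×1024 RGB render, 200 text boxes, 12 features per box), not measurements of PyMuPDF-Layout or of any particular VLM, and raw input size is only a loose proxy for compute.

```python
# Hypothetical sizes for illustration only; not measurements of any real model.
IMAGE_SIDE = 1024       # assumed render resolution for a VLM input (pixels)
CHANNELS = 3            # RGB
TEXT_BOXES = 200        # assumed number of text boxes on a dense page
FEATURES_PER_BOX = 12   # assumed length of each node's heuristic feature vector

pixel_values = IMAGE_SIDE * IMAGE_SIDE * CHANNELS
graph_values = TEXT_BOXES * FEATURES_PER_BOX
ratio = pixel_values / graph_values

print(f"image input values: {pixel_values:,}")  # 3,145,728
print(f"graph input values: {graph_values:,}")  # 2,400
print(f"ratio: {ratio:.0f}x")                   # 1311x
```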

The result? We're 10 times faster than competing solutions while running entirely on CPU. No GPU costs, no cloud API dependencies, just fast local processing.

You can download the package from PyPI or try the live demo.